- Introduction

- Crypto

- Node Info

- Protocol Packet

- DHT

- LAN discovery

- Messenger

- TCP client

- TCP connections

- TCP server

- Friend connection

- Friend requests

- Group

- DHT Group Chats

- DHT Group Chats Packet Protocols

- Full Packet Structure

- Handshake packet payloads

- Lossy Packet Payloads

- Lossless Packet Payloads

- CUSTOM_PRIVATE_PACKET (0xee)

- FRAGMENT (0xef)

- KEY_ROTATIONS (0xf0)

- TCP_RELAYS (0xf1)

- CUSTOM_PACKETS (0xf2)

- BROADCAST (0xf3)

- PEER_INFO_REQUEST (0xf4)

- PEER_INFO_RESPONSE (0xf5)

- INVITE_REQUEST (0xf6)

- INVITE_RESPONSE (0xf7)

- SYNC_REQUEST (0xf8)

- SYNC_RESPONSE (0xf9)

- TOPIC (0xfa)

- SHARED_STATE (0xfb)

- MOD_LIST (0xfc)

- SANCTIONS_LIST (0xfd)

- FRIEND_INVITE (0xfe)

- HS_RESPONSE_ACK (0xff)

- Net crypto

- network.txt

- Onion

- Ping array

- State Format

Introduction

This document is a textual specification of the Tox protocol and all the supporting modules required to implement it. The goal of this document is to give enough guidance to permit a complete and correct implementation of the protocol.

Objectives

This section provides an overview of goals and non-goals of Tox. It provides the reader with:

- a basic understanding of what problems Tox intends to solve;

- a means to validate whether those problems are indeed solved by the protocol as specified;

- the ability to make better tradeoffs and decisions in their own reimplementation of the protocol.

Goals

- Authentication: Tox aims to provide authenticated communication. This means that during a communication session, both parties can be sure of the other party’s identity. Users are identified by their public key. The initial key exchange is currently not in scope for the Tox protocol. In the future, Tox may provide a means for initial authentication using a challenge/response or shared secret based exchange.

  If the secret key is compromised, the user’s identity is compromised, and an attacker can impersonate that user. When this happens, the user must create a new identity with a new public key.

- End-to-end encryption: The Tox protocol establishes end-to-end encrypted communication links. Shared keys are deterministically derived using a Diffie-Hellman-like method, so keys are never transferred over the network.

- Forward secrecy: Session keys are re-negotiated when the peer connection is established.

- Privacy: When Tox establishes a communication link, it aims to avoid leaking to any third party the identities of the parties involved (i.e. their public keys).

  Furthermore, it aims to avoid allowing third parties to determine the IP address of a given user.

- Resilience:

  - Independence of infrastructure: Tox avoids relying on servers as much as possible. Communications are not transmitted via or stored on central servers. Joining a Tox network requires connecting to a well-known node called a bootstrap node. Anyone can run a bootstrap node, and users need not put any trust in them.

  - Tox tries to establish communication paths in difficult network situations. This includes connecting to peers behind a NAT or firewall. Various techniques help achieve this, such as UDP hole-punching, UPnP, NAT-PMP, other untrusted nodes acting as relays, and DNS tunnels.

  - Resistance to basic denial of service attacks: short timeouts make the network dynamic and resilient against poisoning attempts.

- Minimum configuration: Tox aims to be nearly zero-conf. User-friendliness is an important aspect to security. Tox aims to make security easy to achieve for average users.

Non-goals

- Anonymity is not in scope for the Tox protocol itself, but it provides an easy way to integrate with software providing anonymity, such as Tor.

  By default, Tox tries to establish direct connections between peers; as a consequence, each is aware of the other’s IP address, and third parties may be able to determine that a connection has been established between those IP addresses. One of the reasons for making direct connections is that relaying real-time multimedia conversations over anonymity networks is not feasible with the current network infrastructure.

Threat model

TODO(iphydf): Define one.

Data types

All data types are defined before their first use, and their binary protocol representation is given. The protocol representations are normative and must be implemented exactly as specified. For some types, human-readable representations are suggested. An implementation may choose to provide no such representation or a different one. The implementation is free to choose any in-memory representation of the specified types.

Binary formats are specified in tables with length, type, and content descriptions. If applicable, specific enumeration types are used, so types may be self-explanatory in some cases. The length can be either a fixed number in bytes (e.g. 32), a number in bits (e.g. 7 bit), a choice of lengths (e.g. 4 / 16), or an inclusive range (e.g. [0, 100]). Open ranges are denoted [n,] to mean a minimum length of n with no specified maximum length.

Integers

The protocol uses four bounded unsigned integer types. Bounded means each type has an upper bound, which is the largest value it can represent. The integer types support modular arithmetic, so incrementing past the upper bound wraps around to zero. Unsigned means their lower bound is 0. Signed integer types are not used. The binary encoding of all integer types is a fixed-width byte sequence with the integer encoded in Big Endian unless stated otherwise.

| Type name | C type | Length | Upper bound |
|---|---|---|---|
| Word8 | uint8_t | 1 | 255 (0xff) |
| Word16 | uint16_t | 2 | 65535 (0xffff) |
| Word32 | uint32_t | 4 | 4294967295 (0xffffffff) |
| Word64 | uint64_t | 8 | 18446744073709551615 (0xffffffffffffffff) |
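The fixed-width Big Endian encoding and the wrap-around behaviour can be illustrated with a minimal Python sketch. The names `WIDTHS`, `upper_bound`, `encode_word`, `decode_word`, and `increment` are hypothetical helpers chosen for this example and are not part of the specification.

```python
# Byte widths of the four integer types, taken from the table above.
WIDTHS = {"Word8": 1, "Word16": 2, "Word32": 4, "Word64": 8}


def upper_bound(type_name):
    """Largest value representable by the given type."""
    return (1 << (8 * WIDTHS[type_name])) - 1


def encode_word(type_name, value):
    """Encode value as a fixed-width Big Endian byte sequence."""
    if not 0 <= value <= upper_bound(type_name):
        raise ValueError("value out of range for " + type_name)
    return value.to_bytes(WIDTHS[type_name], "big")


def decode_word(type_name, data):
    """Decode a fixed-width Big Endian byte sequence."""
    if len(data) != WIDTHS[type_name]:
        raise ValueError("wrong length for " + type_name)
    return int.from_bytes(data, "big")


def increment(type_name, value):
    """Modular increment: the upper bound wraps around to zero."""
    return (value + 1) % (upper_bound(type_name) + 1)
```

For example, `encode_word("Word16", 0x1234)` yields the two bytes 0x12 0x34, and `increment("Word8", 255)` yields 0.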

Strings

A String is a data structure used for human readable text. Strings are sequences of glyphs. A glyph consists of one non-zero-width Unicode code point and zero or more zero-width Unicode code points. The human-readable representation of a String starts and ends with a quotation mark (") and contains all human-readable glyphs verbatim. Control characters are represented in an isomorphic human-readable way. I.e. every control character has exactly one human-readable representation, and a mapping exists from the human-readable representation to the control character. Therefore, the use of Unicode Control Characters (U+240x) is not permitted without an additional marker.

Crypto

The Crypto module contains all the functions and data types related to cryptography. This includes random number generation, encryption and decryption, key generation, operations on nonces and generating random nonces.

Key

A Crypto Number is a large fixed size unsigned (non-negative) integer. Its binary encoding is as a Big Endian integer in exactly the encoded byte size. Its human-readable encoding is as a base-16 number encoded as a String. The NaCl implementation libsodium supplies the functions sodium_bin2hex and sodium_hex2bin to aid in implementing the human-readable encoding. The in-memory encoding of these crypto numbers in NaCl already satisfies the binary encoding, so for applications directly using those APIs, binary encoding and decoding is the identity function.

Tox uses four kinds of Crypto Numbers:

| Type | Bits | Encoded byte size |
|---|---|---|
| Public Key | 256 | 32 |
| Secret Key | 256 | 32 |
| Combined Key | 256 | 32 |
| Nonce | 192 | 24 |

Key Pair

A Key Pair is a pair of Secret Key and Public Key. A new key pair is generated using the crypto_box_keypair function of the NaCl crypto library. Two separate calls to the key pair generation function must return distinct key pairs. See the NaCl documentation for details.

A Public Key can be computed from a Secret Key using the NaCl function crypto_scalarmult_base, which computes the scalar product of a standard group element and the Secret Key. See the NaCl documentation for details.

Combined Key

A Combined Key is computed from a Secret Key and a Public Key using the NaCl function crypto_box_beforenm. Given two Key Pairs KP1 (SK1, PK1) and KP2 (SK2, PK2), the Combined Key computed from (SK1, PK2) equals the one computed from (SK2, PK1). This allows for symmetric encryption, as peers can derive the same shared key from their own secret key and their peer’s public key.

In the Tox protocol, packets are encrypted using the public key of the receiver and the secret key of the sender. The receiver decrypts the packets using the receiver’s secret key and the sender’s public key.

The fact that the same key is used to encrypt and decrypt packets on both sides means that packets being sent could be replayed back to the sender if there is nothing to prevent it.

The shared key generation is the most resource intensive part of the encryption/decryption which means that resource usage can be reduced considerably by saving the shared keys and reusing them later as much as possible.

Nonce

A random nonce is generated using randombytes, the cryptographically secure random number generator from the NaCl library.

A nonce is incremented by interpreting it as a Big Endian number and adding 1. If the nonce has the maximum value, the value after the increment is 0.
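The increment rule can be sketched in a few lines of Python. The function names are hypothetical, and `os.urandom` stands in for NaCl's randombytes purely for illustration; an implementation built on NaCl would use the library's own generator.

```python
import os

NONCE_SIZE = 24  # encoded byte size of a Nonce (192 bits)


def random_nonce():
    """Generate a random nonce (os.urandom is an illustrative stand-in)."""
    return os.urandom(NONCE_SIZE)


def increment_nonce(nonce):
    """Interpret the nonce as a Big Endian number and add 1.

    The maximum value wraps around to 0.
    """
    if len(nonce) != NONCE_SIZE:
        raise ValueError("a nonce must be exactly 24 bytes")
    value = (int.from_bytes(nonce, "big") + 1) % (1 << (8 * NONCE_SIZE))
    return value.to_bytes(NONCE_SIZE, "big")
```

Because the nonce is Big Endian, the carry propagates from the last byte towards the first.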

Most parts of the protocol use random nonces. This prevents new nonces from being associated with previous nonces. If many different packets could be tied together due to how the nonces were generated, it might for example lead to tying DHT and onion announce packets together. This would introduce a flaw in the system as non friends could tie some people’s DHT keys and long term keys together.

Box

The Tox protocol differentiates between two types of text: Plain Text and Cipher Text. Cipher Text may be transmitted over untrusted data channels. Plain Text can be Sensitive or Non Sensitive. Sensitive Plain Text must be transformed into Cipher Text using the encryption function before it can be transmitted over untrusted data channels.

The encryption function takes a Combined Key, a Nonce, and a Plain Text, and returns a Cipher Text. It uses crypto_box_afternm to perform the encryption. The meaning of the sentence “encrypting with a secret key, a public key, and a nonce” is: compute a combined key from the secret key and the public key and then use the encryption function for the transformation.

The decryption function takes a Combined Key, a Nonce, and a Cipher Text, and returns either a Plain Text or an error. It uses crypto_box_open_afternm from the NaCl library. Since the cipher is symmetric, the encryption function can also perform decryption, but will not perform message authentication, so the implementation must be careful to use the correct functions.

crypto_box uses xsalsa20 symmetric encryption and poly1305 authentication.

The create and handle request functions are the encrypt and decrypt functions for a type of DHT packet used to send data directly to other DHT nodes. They should probably be in the DHT module, but they seem to fit better here. TODO: What exactly are these functions?

Node Info

Transport Protocol

A Transport Protocol is a transport layer protocol directly below the Tox protocol itself. Tox supports two transport protocols: UDP and TCP. The binary representation of the Transport Protocol is a single bit: 0 for UDP, 1 for TCP. If encoded as standalone value, the bit is stored in the least significant bit of a byte. If followed by other bit-packed data, it consumes exactly one bit.

The human-readable representation for UDP is UDP and for TCP is TCP.

Host Address

A Host Address is either an IPv4 or an IPv6 address. The binary representation of an IPv4 address is a Big Endian 32 bit unsigned integer (4 bytes). For an IPv6 address, it is a Big Endian 128 bit unsigned integer (16 bytes). The binary representation of a Host Address is a 7 bit unsigned integer specifying the address family (2 for IPv4, 10 for IPv6), followed by the address itself.

Thus, when packed together with the Transport Protocol, the first (most significant) bit of the packed byte is the protocol and the remaining 7 bits are the address family.

Port Number

A Port Number is a 16 bit number. Its binary representation is a Big Endian 16 bit unsigned integer (2 bytes).

Socket Address

A Socket Address is a pair of Host Address and Port Number. Together with a Transport Protocol, it is sufficient information to address a network port on any internet host.

Node Info (packed node format)

The Node Info data structure contains a Transport Protocol, a Socket Address, and a Public Key. This is sufficient information to start communicating with that node. The binary representation of a Node Info is called the “packed node format”.

| Length | Type | Contents |
|---|---|---|
| 1 bit | Transport Protocol | UDP = 0, TCP = 1 |
| 7 bit | Address Family | 2 = IPv4, 10 = IPv6 |
| 4 / 16 | IP address | 4 bytes for IPv4, 16 bytes for IPv6 |
| 2 | Port Number | Port number |
| 32 | Public Key | Node ID |

The packed node format is a way to store the node info in a small yet easy to parse format. To store more than one node, simply append another one to the previous one: [packed node 1][packed node 2][...].

In the packed node format, the first byte (high bit protocol, lower 7 bits address family) is called the IP Type. The following table is informative and can be used to simplify the implementation.

| IP Type | Transport Protocol | Address Family |
|---|---|---|
| 2 (0x02) | UDP | IPv4 |
| 10 (0x0a) | UDP | IPv6 |
| 130 (0x82) | TCP | IPv4 |
| 138 (0x8a) | TCP | IPv6 |

The number 130 is used for an IPv4 TCP relay and 138 is used to indicate an IPv6 TCP relay.

The reason for these numbers is that the numbers on Linux for IPv4 and IPv6 (the AF_INET and AF_INET6 defines) are 2 and 10. The TCP numbers are just the UDP numbers + 128.
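As an illustration of the packed node format, the following Python sketch encodes and decodes a single Node Info. The function names and the tuple returned by `unpack_node` are choices made for this example only; the byte layout follows the table above.

```python
import ipaddress
import struct

AF_IPV4, AF_IPV6 = 2, 10  # address family numbers from the table above
PUBLIC_KEY_SIZE = 32


def pack_node(is_tcp, host, port, public_key):
    """Encode one Node Info in the packed node format."""
    if len(public_key) != PUBLIC_KEY_SIZE:
        raise ValueError("public key must be 32 bytes")
    addr = ipaddress.ip_address(host)
    family = AF_IPV4 if addr.version == 4 else AF_IPV6
    # IP Type: high bit is the transport protocol, low 7 bits the family.
    ip_type = (0x80 if is_tcp else 0x00) | family
    return bytes([ip_type]) + addr.packed + struct.pack(">H", port) + public_key


def unpack_node(data):
    """Decode one packed node; return (node, remaining bytes).

    node is a tuple (is_tcp, host, port, public_key).
    """
    if not data:
        raise ValueError("empty input")
    is_tcp = bool(data[0] & 0x80)
    family = data[0] & 0x7F
    if family == AF_IPV4:
        addr_len = 4
    elif family == AF_IPV6:
        addr_len = 16
    else:
        raise ValueError("unknown address family")
    end = 1 + addr_len + 2 + PUBLIC_KEY_SIZE
    if len(data) < end:
        raise ValueError("truncated packed node")
    host = str(ipaddress.ip_address(data[1:1 + addr_len]))
    (port,) = struct.unpack(">H", data[1 + addr_len:3 + addr_len])
    return (is_tcp, host, port, data[3 + addr_len:end]), data[end:]
```

Since `unpack_node` returns the unconsumed bytes, a list of appended packed nodes can be decoded by calling it repeatedly until the remainder is empty.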

Protocol Packet

A Protocol Packet is the top level Tox protocol element. All other packet types are wrapped in Protocol Packets. It consists of a Packet Kind and a payload. The binary representation of a Packet Kind is a single byte (8 bits). The payload is an arbitrary sequence of bytes.

| Length | Type | Contents |
|---|---|---|
| 1 | Packet Kind | The packet kind identifier |
| [0,] | Bytes | Payload |

These top level packets can be transported in a number of ways, the most common way being over the network using UDP or TCP. The protocol itself does not prescribe transport methods, and an implementation is free to implement additional transports such as WebRTC, IRC, or pipes.

In the remainder of the document, different kinds of Protocol Packet are specified with their packet kind and payload. The packet kind is not repeated in the payload description (TODO: actually it mostly is, but later it won’t).

Inside the payload of a Protocol Packet, other packet types can specify additional packet kinds. E.g. inside a Crypto Data packet (0x1b), the Messenger module defines its protocols for messaging, file transfers, etc. Top level Protocol Packets are themselves not encrypted, though their payload may be.

Packet Kind

The following is an exhaustive list of top level packet kind names and their number. Their payload is specified in dedicated sections. Each section is named after the Packet Kind it describes followed by the byte value in parentheses, e.g. Ping Request (0x00).

| Byte value | Packet Kind |
|---|---|
| 0x00 | Ping Request |
| 0x01 | Ping Response |
| 0x02 | Nodes Request |
| 0x04 | Nodes Response |
| 0x18 | Cookie Request |
| 0x19 | Cookie Response |
| 0x1a | Crypto Handshake |
| 0x1b | Crypto Data |
| 0x20 | DHT Request |
| 0x21 | LAN Discovery |
| 0x80 | Onion Request 0 |
| 0x81 | Onion Request 1 |
| 0x82 | Onion Request 2 |
| 0x83 | Announce Request |
| 0x84 | Announce Response |
| 0x85 | Onion Data Request |
| 0x86 | Onion Data Response |
| 0x8c | Onion Response 3 |
| 0x8d | Onion Response 2 |
| 0x8e | Onion Response 1 |
| 0xf0 | Bootstrap Info |

DHT

The DHT is a self-organizing swarm of all nodes in the Tox network. A node in the Tox network is also called a “Tox node”. When we talk about “peers”, we mean any node that is not the local node (the subject). This module takes care of finding the IP and port of nodes and establishing a route to them directly via UDP using hole punching if necessary. The DHT only runs on UDP and so is only used if UDP works.

Every node in the Tox DHT has an ephemeral Key Pair called the DHT Key Pair, consisting of the DHT Secret Key and the DHT Public Key. The DHT Public Key acts as the node address. The DHT Key Pair is renewed every time the Tox instance is closed or restarted. An implementation may choose to renew the key more often, but doing so will disconnect all peers.

The DHT public key of a friend is found using the onion module. Once the DHT public key of a friend is known, the DHT is used to find them and connect directly to them via UDP.

Distance

A Distance is a non-negative integer. Its human-readable representation is a base-16 number. Distance (type) is an ordered monoid with the associative binary operator + and the identity element 0.

The DHT uses a metric to determine the distance between two nodes. The Distance type is the co-domain of this metric. The metric currently used by the Tox DHT is the XOR of the nodes’ public keys: distance(x, y) = x XOR y. For this computation, public keys are interpreted as Big Endian integers (see Crypto Numbers).

When we speak of a “close node”, we mean that its Distance to the node under consideration is small compared to the Distance to other nodes.

An implementation is not required to provide a Distance type, so it has no specified binary representation. For example, instead of computing a distance and comparing it against another distance, the implementation can choose to implement Distance as a pair of public keys and define an ordering on Distance without computing the complete integral value. This works, because as soon as an ordering decision can be made in the most significant bits, further bits won’t influence that decision.
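The ordering trick described above can be sketched as a byte-wise comparison that stops at the first byte where the two distances differ. The function name `compare_distance` and its -1/0/1 return convention are assumptions made for this example.

```python
def compare_distance(base, a, b):
    """Order keys a and b by their XOR distance to base.

    Returns -1 if a is closer to base than b, 1 if it is further away,
    and 0 if both are at the same distance. Keys are equal-length byte
    strings interpreted as Big Endian integers, so the comparison can
    stop at the first (most significant) byte where the distances differ.
    """
    for base_byte, a_byte, b_byte in zip(base, a, b):
        da = base_byte ^ a_byte
        db = base_byte ^ b_byte
        if da != db:
            return -1 if da < db else 1
    return 0
```

The result agrees with comparing the full 256 bit XOR values, but no large integer is ever constructed.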

XOR is a valid metric, i.e. it satisfies the required conditions:

- Non-negativity (distance(x, y) >= 0): Since public keys are Crypto Numbers, which are by definition non-negative, their XOR is necessarily non-negative.

- Identity of indiscernibles (distance(x, y) == 0 iff x == y): The XOR of two integers is zero iff they are equal.

- Symmetry (distance(x, y) == distance(y, x)): XOR is a symmetric operation.

- Subadditivity (distance(x, z) <= distance(x, y) + distance(y, z)): follows from associativity, since x XOR z = x XOR (y XOR y) XOR z = distance(x, y) XOR distance(y, z), which is not greater than distance(x, y) + distance(y, z).

In addition, XOR has other useful properties:

- Unidirectionality: given the key x and the distance d, there exists one and only one key y such that distance(x, y) = d. The implication is that repeated lookups are likely to pass along the same way and thus caching makes sense.

Source: maymounkov-kademlia

Example: Given three nodes with keys 2, 5, and 6:

- 2 XOR 5 = 7
- 2 XOR 6 = 4
- 5 XOR 2 = 7
- 5 XOR 6 = 3
- 6 XOR 2 = 4
- 6 XOR 5 = 3

The closest node to both 2 and 5 is 6. The closest node to 6 is 5, with distance 3. This example shows that a key that is close in terms of integer arithmetic may not necessarily be close in terms of XOR: 5 is numerically closer to 2 than 6 is, yet 6 is closer to 2 under the XOR metric.
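The example can be reproduced with a short Python sketch. The helper names `distance` and `closest` are hypothetical, and one-byte keys are used instead of 32-byte public keys to keep the numbers readable.

```python
def distance(x, y):
    """XOR metric: keys are interpreted as Big Endian integers."""
    return int.from_bytes(x, "big") ^ int.from_bytes(y, "big")


def closest(key, candidates):
    """Return the candidate key with the smallest distance to key."""
    return min(candidates, key=lambda other: distance(key, other))


# One-byte stand-ins for the keys 2, 5, and 6 from the example.
k2, k5, k6 = bytes([2]), bytes([5]), bytes([6])
```

With these definitions, `closest(k2, [k5, k6])` and `closest(k5, [k2, k6])` both return `k6`, and `closest(k6, [k2, k5])` returns `k5`.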

Client Lists

A Client List of maximum size k with a given public key as base key is an ordered set of at most k nodes close to the base key. The elements are sorted by distance from the base key. Thus, the first (smallest) element of the set is the closest one to the base key in that set, the last (greatest) element is the furthest away. The maximum size and base key are constant throughout the lifetime of a Client List.

A Client List is full when the number of nodes it contains is the maximum size of the list.

A node is viable for entry if the Client List is not full or the node’s public key has a lower distance from the base key than the current entry with the greatest distance.

If a node is viable and the Client List is full, the entry with the greatest distance from the base key is removed so that the size does not exceed the maximum configured size.

Adding a node whose key already exists will result in an update of the Node Info in the Client List. Removing a node for which no Node Info exists in the Client List has no effect. Thus, removing a node twice is permitted and has the same effect as removing it once.

The iteration order of a Client List is in order of distance from the base key. I.e. the first node seen in iteration is the closest, and the last node is the furthest away in terms of the distance metric.
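The Client List rules above can be sketched as a small Python class. This is one possible in-memory representation under stated assumptions: the class and method names are hypothetical, the Node Info is treated as an opaque value, and the maximum size is assumed to be at least 1.

```python
class ClientList:
    """Illustrative Client List: at most max_size nodes, ordered by distance."""

    def __init__(self, base_key, max_size):
        self.base_key = base_key
        self.max_size = max_size  # assumed to be >= 1
        self._nodes = {}  # public key -> node info

    def _distance(self, key):
        return int.from_bytes(self.base_key, "big") ^ int.from_bytes(key, "big")

    def is_full(self):
        return len(self._nodes) >= self.max_size

    def viable(self, key):
        """Viable if the list is not full or key beats the furthest entry."""
        if not self.is_full():
            return True
        furthest = max(self._nodes, key=self._distance)
        return self._distance(key) < self._distance(furthest)

    def add(self, key, info):
        """Add or update a node; return True if the list now contains it."""
        if key in self._nodes:
            self._nodes[key] = info  # existing key: update the Node Info
            return True
        if not self.viable(key):
            return False
        if self.is_full():
            # Evict the entry with the greatest distance from the base key.
            del self._nodes[max(self._nodes, key=self._distance)]
        self._nodes[key] = info
        return True

    def remove(self, key):
        """Removing an absent node has no effect."""
        self._nodes.pop(key, None)

    def __iter__(self):
        """Iterate over (key, info) pairs, closest first."""
        return iter(sorted(self._nodes.items(),
                           key=lambda item: self._distance(item[0])))
```

Sorting on iteration keeps the sketch short; an implementation that iterates often might instead keep the entries in a sorted container.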

K-buckets

K-buckets is a data structure for efficiently storing a set of nodes close to a certain key called the base key. The base key is constant throughout the lifetime of a k-buckets instance.

A k-buckets is a map from small integers 0 <= n < 256 to Client Lists of maximum size k. Each Client List is called a (k-)bucket. A k-buckets is equipped with a base key, and each bucket has this key as its base key. k is called the bucket size. The default bucket size is 8. A large bucket size was chosen to increase the speed at which peers are found.

The above number n is the bucket index. It is a non-negative integer with the range [0, 255], i.e. the range of an 8 bit unsigned integer.

Bucket Index

The index of the bucket can be computed using the following function:

bucketIndex(baseKey, nodeKey) = 255 - floor(log_2(distance(baseKey, nodeKey))).

This function is not defined when baseKey == nodeKey, meaning k-buckets will never contain a Node Info about the base node.

Thus, each k-bucket contains only Node Infos for whose keys the following holds: if a node with key nodeKey is in the k-bucket with index n, then bucketIndex(baseKey, nodeKey) == n. Equivalently, the n’th k-bucket consists of the nodes whose distance to the base node lies in the range [2^(255 - n), 2^(256 - n) - 1].

The bucket index can be efficiently computed by determining the first bit at which the two keys differ, starting from the most significant bit. So, if the local DHT key starts with e.g. 0x80 and the bucketed node key starts with 0x40, then the bucket index for that node is 0. If the second bit differs, the bucket index is 1. If the keys are almost exactly equal and only the last bit differs, the bucket index is 255.
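A minimal Python sketch of the bucket index computation follows. The function name and the use of `None` for the undefined case are choices made for this example. It relies on the fact that for a positive integer d, `d.bit_length()` equals floor(log_2(d)) + 1, so the index is the position of the first differing bit counted from the most significant bit.

```python
KEY_SIZE = 32  # public keys are 256 bits


def bucket_index(base_key, node_key):
    """Index of the first differing bit, counted from the most significant.

    Returns None when the keys are equal, since the index is undefined.
    """
    if len(base_key) != KEY_SIZE or len(node_key) != KEY_SIZE:
        raise ValueError("keys must be 32 bytes")
    d = int.from_bytes(base_key, "big") ^ int.from_bytes(node_key, "big")
    if d == 0:
        return None
    # 256 - bit_length(d) is the same as 255 - floor(log_2(d)).
    return 8 * KEY_SIZE - d.bit_length()
```

A byte-wise scan for the first non-zero XOR byte would avoid building the 256 bit integer; the integer form is used here only because it mirrors the formula directly.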

Manipulating k-buckets

TODO: this is different from kademlia’s least-recently-seen eviction policy; why the existing solution was chosen, how does it affect security, performance and resistance to poisoning? original paper claims that preference of old live nodes results in better persistence and resistance to basic DDoS attacks;

Any update or lookup operation on a k-buckets instance that involves a single node requires us to first compute the bucket index for that node. An update involving a Node Info with nodeKey == baseKey has no effect. If the update results in an empty bucket, that bucket is removed from the map.

Adding a node to, or removing a node from, a k-buckets consists of performing the corresponding operation on the Client List bucket whose index is that of the node’s public key, except that adding a new node to a full bucket has no effect. A node is considered viable for entry if the corresponding bucket is not full.

Iteration order of a k-buckets instance is in order of distance from the base key. I.e. the first node seen in iteration is the closest, and the last node is the furthest away in terms of the distance metric.

DHT node state

Every DHT node contains the following state:

- DHT Key Pair: The Key Pair used to communicate with other DHT nodes. It is immutable throughout the lifetime of the DHT node.

- DHT Close List: A set of Node Infos of nodes that are close to the DHT Public Key (public part of the DHT Key Pair). The Close List is represented as a k-buckets data structure, with the DHT Public Key as the Base Key.

- DHT Search List: A list of Public Keys of nodes that the DHT node is searching for, associated with a DHT Search Entry.

A DHT node state is initialised using a Key Pair, which is stored in the state as DHT Key Pair and as base key for the Close List. Both the Close and Search Lists are initialised to be empty.

DHT Search Entry

A DHT Search Entry contains a Client List with base key the searched node’s Public Key. Once the searched node is found, it is also stored in the Search Entry.

The maximum size of the Client List is set to 8. It must be the same as or smaller than the bucket size of the Close List, to make sure all the closest peers found will know the node being searched. (TODO(zugz): this argument is unclear.)

A DHT node state therefore contains one Client List for each bucket index in the Close List, and one Client List for each DHT Search Entry. These lists are not required to be disjoint - a node may be in multiple Client Lists simultaneously.

A Search Entry is initialised with the searched-for Public Key. The contained Client List is initialised to be empty.

Manipulating the DHT node state

Adding a search key to the DHT node state creates an empty entry in the Search List. If a search entry for the public key already existed, the “add” operation has no effect.

Removing a search key removes its search entry and all associated data structures from memory.

The Close List and the Search Entries are termed the Node Lists of the DHT State.

The iteration order over the DHT state is to first process the Close List k-buckets, then the Search List entry Client Lists. Each of these follows the iteration order in the corresponding specification.

A node info is considered to be contained in the DHT State if it is contained in the Close List or in at least one of the Search Entries.

The size of the DHT state is defined to be the number of node infos it contains, counted with multiplicity: node infos contained multiple times, e.g. in the close list and in various search entries, are counted as many times as they appear. Search keys do not directly count towards the state size. So the size of the state is the sum of the sizes of the Close List and the Search Entries.

The state size is relevant to later pruning algorithms that decide when to remove a node info and when to request a ping from stale nodes. Search keys, once added, are never automatically pruned.

Adding a Node Info to the state is done by adding the node to each Node List in the state.

When adding a node info to the state, the search entry for the node’s public key, if it exists, is updated to contain the new node info. All k-buckets and Client Lists that already contain the node info will also be updated. See the corresponding specifications for the update algorithms. However, a node info will not be added to a search entry when it is the node to which the search entry is associated (i.e. the node being searched for).

Removing a node info from the state removes it from all k-buckets. If a search entry for the removed node’s public key existed, the node info in that search entry is unset. The search entry itself is not removed.

Self-organisation

Self-organising in the DHT occurs through each DHT peer connecting to an arbitrary number of peers closest to their own DHT public key and some that are further away.

If each peer in the network knows the peers with the DHT public key closest to its DHT public key, then to find a specific peer with public key X a peer just needs to recursively ask peers in the DHT for known peers that have the DHT public keys closest to X. Eventually the searching peer will find the peers in the DHT that are closest to X and, if the peer with public key X is online, it will find that peer.

DHT Packet

The DHT Packet contains the sender’s DHT Public Key, an encryption Nonce, and an encrypted payload. The payload is encrypted with the DHT secret key of the sender, the DHT public key of the receiver, and the nonce that is sent along with the packet. DHT Packets are sent inside Protocol Packets with a varying Packet Kind.

| Length | Type | Contents |
|---|---|---|
| 32 | Public Key | Sender DHT Public Key |
| 24 | Nonce | Random nonce |
| [16,] | Bytes | Encrypted payload |

The encrypted payload is at least 16 bytes long, because the encryption includes a MAC of 16 bytes. A 16 byte payload would thus be the empty message. The DHT protocol never actually sends empty messages, so in reality the minimum size is 25 bytes for the Ping Packet: the 16 byte MAC, the 1 byte response flag, and the 8 byte Request ID.
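The outer layout of a DHT Packet can be illustrated with the following Python sketch, which only splits and joins the three fields. Encryption and decryption are deliberately left out, and the function names are hypothetical.

```python
PUBLIC_KEY_SIZE = 32
NONCE_SIZE = 24
MAC_SIZE = 16  # an encrypted payload always includes the 16 byte MAC


def build_dht_packet(sender_public_key, nonce, encrypted_payload):
    """Concatenate the three fields of a DHT Packet."""
    if len(sender_public_key) != PUBLIC_KEY_SIZE:
        raise ValueError("public key must be 32 bytes")
    if len(nonce) != NONCE_SIZE:
        raise ValueError("nonce must be 24 bytes")
    if len(encrypted_payload) < MAC_SIZE:
        raise ValueError("encrypted payload shorter than the MAC")
    return sender_public_key + nonce + encrypted_payload


def split_dht_packet(data):
    """Split a DHT Packet into (sender public key, nonce, encrypted payload)."""
    header = PUBLIC_KEY_SIZE + NONCE_SIZE
    if len(data) < header + MAC_SIZE:
        raise ValueError("DHT Packet too short")
    return data[:PUBLIC_KEY_SIZE], data[PUBLIC_KEY_SIZE:header], data[header:]
```

The receiver would then decrypt the third field using its own DHT secret key, the sender public key from the first field, and the nonce from the second.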

RPC Services

A DHT RPC Service consists of a Request packet and a Response packet. A DHT RPC Packet contains a payload and a Request ID. This ID is a 64 bit unsigned integer that helps identify the response for a given request.

Replies to RPC requests

A reply to a Request packet is a Response packet with the Request ID in the Response packet set equal to the Request ID in the Request packet. A response is accepted if and only if it is the first received reply to a request which was sent sufficiently recently, according to a time limit which depends on the service.

DHT RPC Packets are encrypted and transported within DHT Packets.

| Length | Type | Contents |
|---|---|---|
| [0,] | Bytes | Payload |
| 8 | uint64_t | Request ID |

The minimum payload size is 0, but in reality the smallest sensible payload size is 1. Since the same symmetric key is used in both communication directions, an encrypted Request would be a valid encrypted Response if they contained the same plaintext.

Parts of the protocol using RPC packets must take care to make Request payloads not be valid Response payloads. For instance, Ping Packets carry a boolean flag that indicates whether the payload corresponds to a Request or a Response.

The Request ID provides some resistance against replay attacks. If there were no Request ID, it would be easy for an attacker to replay old responses and thus provide nodes with out-of-date information. A Request ID should be randomly generated for each Request which is sent.

Ping Service

The Ping Service is used to check if a node is responsive.

A Ping Packet payload consists of just a boolean value saying whether it is a request or a response.

The one byte boolean inside the encrypted payload is added to prevent peers from creating a valid Ping Response from a Ping Request without decrypting the packet and encrypting a new one. Since symmetric encryption is used, the encrypted Ping Response would be byte-wise equal to the Ping Request without the discriminator byte.

| Length | Type | Contents |
|---|---|---|
| 1 | Bool | Response flag: 0x00 for Request, 0x01 for Response |

Ping Request (0x00)

A Ping Request is a Ping Packet with the response flag set to False. When a Ping Request is received and successfully decrypted, a Ping Response packet is created and sent back to the requestor.

Ping Response (0x01)

A Ping Response is a Ping Packet with the response flag set to True.
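The plaintext of a Ping RPC packet (before encryption) can be sketched as follows. The function names are hypothetical, `os.urandom` is an illustrative source of randomness, and the Request ID is assumed to be Big Endian as stated for integers in general.

```python
import os
import struct

PING_PLAIN_SIZE = 9  # 1 byte response flag + 8 byte Request ID


def new_request_id():
    """Random 64 bit Request ID for a new request."""
    return int.from_bytes(os.urandom(8), "big")


def encode_ping(is_response, request_id):
    """Plaintext of a Ping Packet: payload first, then the Request ID."""
    return struct.pack(">BQ", 0x01 if is_response else 0x00, request_id)


def decode_ping(plain):
    """Return (is_response, request_id) or raise ValueError."""
    if len(plain) != PING_PLAIN_SIZE:
        raise ValueError("wrong ping plaintext size")
    flag, request_id = struct.unpack(">BQ", plain)
    if flag not in (0x00, 0x01):
        raise ValueError("invalid response flag")
    return bool(flag), request_id


def respond_to_ping(request_plain):
    """Build the Ping Response plaintext for a decrypted Ping Request."""
    is_response, request_id = decode_ping(request_plain)
    if is_response:
        raise ValueError("not a Ping Request")
    return encode_ping(True, request_id)
```

The response echoes the Request ID and flips the flag, which is what allows the requester to match it against its outstanding request.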

Nodes Service

The Nodes Service is used to query another DHT node for up to 4 nodes they know that are the closest to a requested node.

The DHT Nodes RPC service uses the Packed Node Format.

Only the UDP Protocol (IP Type 2 and 10) is used in the DHT module when sending nodes with the packed node format. This is because the TCP Protocol is used to send TCP relay information and the DHT is UDP only.

Nodes Request (0x02)

| Length | Type | Contents |
|---|---|---|
| 32 | Public Key | Requested DHT Public Key |

The DHT Public Key sent in the request is the one the sender is searching for.

Nodes Response (0x04)

| Length | Type | Contents |
|---|---|---|
| 1 | Int | Number of nodes in the response (maximum 4) |
| [39, 204] | Node Infos | Nodes in Packed Node Format |

An IPv4 node is 39 bytes, an IPv6 node is 51 bytes, so the maximum size of the packed Node Infos is 51 * 4 = 204 bytes.

Nodes responses should contain the 4 closest nodes that the sender of the response has in their lists of known nodes.
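Selecting the nodes for a Nodes Response can be sketched as below. The function names are hypothetical, and the sketch assumes the responder keeps a mapping from public key to an already encoded packed node, which is an assumption of this example rather than something the specification requires.

```python
MAX_NODES = 4  # a Nodes Response carries at most 4 nodes


def xor_distance(a, b):
    return int.from_bytes(a, "big") ^ int.from_bytes(b, "big")


def build_nodes_response(known, requested_key):
    """Build the Nodes Response payload for requested_key.

    known maps a node's public key to its packed node bytes. The result
    is the one byte node count followed by the packed nodes, closest first.
    """
    chosen = sorted(known, key=lambda k: xor_distance(k, requested_key))
    chosen = chosen[:MAX_NODES]
    return bytes([len(chosen)]) + b"".join(known[k] for k in chosen)
```

The Request ID that completes the RPC packet is not added here; it would be appended before encryption.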

DHT Operation

DHT Initialisation

A new DHT node is initialised with a DHT State with a fresh random key pair, an empty close list, and a search list containing 2 empty search entries searching for the public keys of fresh random key pairs.

Periodic sending of Nodes Requests

For each Nodes List in the DHT State, every 20 seconds we send a Nodes Request to a random node on the list, searching for the base key of the list.

When a Nodes List first becomes populated with nodes, we send 5 such random Nodes Requests in quick succession.

Random nodes are chosen because a predictable choice of the next node to query would give an attacker one more way to disrupt the network.

Furthermore, we periodically check every node for responsiveness by sending it a Nodes Request: for each Nodes List in the DHT State, we send each node on the list a Nodes Request every 60 seconds, searching for the base key of the list. We remove from the DHT State any node from which we persistently fail to receive Nodes Responses.

c-toxcore’s implementation of checking and timeouts: A Last Checked time is maintained for each node in each list. When a node is added to a list, if doing so evicts a node from the list then the Last Checked time is set to that of the evicted node, and otherwise it is set to 0. This includes updating an already present node. Nodes from which we have not received a Nodes Response for 122 seconds are considered Bad; they remain in the DHT State, but are preferentially overwritten when adding to the DHT State, and are ignored for all operations except the once-per-60s checking described above. If we have not received a Nodes Response for 182 seconds, the node is not even checked. So one check is sent after the node becomes Bad. In the special case that every node in the Close List is Bad, they are all checked once more.

hs-toxcore implementation of checking and timeouts: We maintain a Last Checked timestamp and a Checks Counter on each node on each Nodes List in the DHT State. When a node is added to a list, these are set respectively to the current time and to 0. This includes updating an already present node. We periodically pass through the nodes on the lists, and for each which is due a check, we: check it, update the timestamp, increment the counter, and, if the counter is then 2, remove the node from the list. This is close to the behaviour of c-toxcore, but much simpler. TODO: currently hs-toxcore doesn’t do anything to try to recover if the Close List becomes empty. We could maintain a separate list of the most recently heard from nodes, and repopulate the Close List with that if the Close List becomes empty.
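The hs-toxcore scheme can be sketched as follows. This is an illustration, not code from hs-toxcore: the names are hypothetical, and it assumes a node is "due a check" once 60 seconds have passed since its Last Checked time.

```python
from dataclasses import dataclass

CHECK_PERIOD = 60  # seconds between checks of one node (assumption)
MAX_CHECKS = 2     # a node is removed once its counter reaches this value


@dataclass
class NodeEntry:
    last_checked: float
    checks: int = 0


def add_or_update(nodes: dict, key: bytes, now: float) -> None:
    """Adding or updating a node resets its timestamp and counter."""
    nodes[key] = NodeEntry(last_checked=now)


def check_pass(nodes: dict, now: float, send_check) -> None:
    """One periodic pass over a Nodes List."""
    for key in list(nodes):
        entry = nodes[key]
        if now - entry.last_checked < CHECK_PERIOD:
            continue  # not yet due
        send_check(key)  # e.g. send a Nodes Request to this node
        entry.last_checked = now
        entry.checks += 1
        if entry.checks == MAX_CHECKS:
            del nodes[key]
```

A node that answers is passed to `add_or_update` again, which resets its counter, so only nodes that miss consecutive checks are dropped.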

Handling Nodes Response packets

When we receive a valid Nodes Response packet, we first check that it is a reply to a Nodes Request which we sent within the last 60 seconds to the node from which we received the response, and that no previous reply has been received. If this check fails, the packet is ignored. If the check succeeds, first we add to the DHT State the node from which the response was sent. Then, for each node listed in the response and for each Nodes List in the DHT State which does not currently contain the node and to which the node is viable for entry, we send a Nodes Request to the node with the requested public key being the base key of the Nodes List.

An implementation may choose not to send every such Nodes Request. (c-toxcore sends only a limited number per list per 50ms, 8 for the Close List and 4 for a Search Entry, prioritising the nodes closest to the base key.)

Handling Nodes Request packets

When we receive a Nodes Request packet from another node, we reply with a Nodes Response packet containing the 4 nodes in the DHT State which are the closest to the public key in the packet. If there are fewer than 4 nodes in the state, we reply with all the nodes in the state. If there are no nodes in the state, no reply is sent.
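Selecting the nodes for the reply can be sketched as below. The sketch assumes the DHT distance metric is the XOR of the two public keys read as big-endian integers, as defined in the distance section of this specification; the function names are illustrative.

```python
def xor_distance(a: bytes, b: bytes) -> int:
    """Distance between two public keys (assumed XOR metric, big-endian)."""
    return int.from_bytes(a, "big") ^ int.from_bytes(b, "big")


def closest_nodes(known_keys, target: bytes, count: int = 4):
    """Return up to `count` known keys, closest to `target` first."""
    return sorted(known_keys, key=lambda k: xor_distance(k, target))[:count]
```

An empty result corresponds to the case where no reply is sent.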

We also send a Ping Request when this is appropriate; see below.

Handling Ping Request packets

When a valid Ping Request packet is received, we reply with a Ping Response.

We also send a Ping Request when this is appropriate; see below.

Handling Ping Response packets

When we receive a valid Ping Response packet, we first check that it is a reply to a Ping Request which we sent within the last 5 seconds to the node from which we received the response, and that no previous reply has been received. If this check fails, the packet is ignored. If the check succeeds, we add to the DHT State the node from which the response was sent.
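The same check applies to Nodes Responses (60 seconds) and Ping Responses (5 seconds). A minimal sketch of the bookkeeping follows; the class and method names are hypothetical, and the 8 byte Request ID size is an assumption.

```python
import os

NODES_TIMEOUT = 60  # seconds a Nodes Request stays answerable
PING_TIMEOUT = 5    # seconds a Ping Request stays answerable


class PendingRequests:
    def __init__(self, timeout: float) -> None:
        self.timeout = timeout
        self._sent = {}  # request id -> (node public key, time sent)

    def add(self, node_key: bytes, now: float) -> bytes:
        """Record a request and return its fresh random Request ID."""
        request_id = os.urandom(8)  # assumed Request ID size
        self._sent[request_id] = (node_key, now)
        return request_id

    def accept(self, request_id: bytes, node_key: bytes, now: float) -> bool:
        """True only for the first timely reply from the node we asked."""
        entry = self._sent.get(request_id)
        if entry is None:
            return False
        sent_to, sent_at = entry
        if sent_to != node_key or now - sent_at > self.timeout:
            return False
        del self._sent[request_id]  # a second reply is ignored
        return True
```

A real implementation would also prune expired entries; that is left out here to keep the sketch short.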

Sending Ping Requests

When we receive a Nodes Request or a Ping Request, in addition to the handling described above, we sometimes send a Ping Request. Namely, we send a Ping Request to the node which sent the packet if the node is viable for entry to the Close List and is not already in the Close List. An implementation may (TODO: should?) choose not to send every such Ping Request. (c-toxcore sends at most 32 every 2 seconds, preferring closer nodes.)

DHT Request Packets

DHT Request packets are used to route encrypted data from a sender to another node, referred to as the addressee of the packet, via a third node.

A DHT Request Packet is sent as the payload of a Protocol Packet with the corresponding Packet Kind. It contains the DHT Public Key of an addressee, and a DHT Packet which is to be received by the addressee.

| Length | Type | Contents |
|---|---|---|
| 32 | Public Key | Addressee DHT Public Key |
| [72,] | DHT Packet | DHT Packet |

Handling DHT Request packets

A DHT node that receives a DHT request packet checks whether the addressee public key is their DHT public key. If it is, they will decrypt and handle the packet. Otherwise, they will check whether the addressee DHT public key is the DHT public key of one of the nodes in their Close List. If it isn’t, they will drop the packet. If it is they will resend the packet, unaltered, to that DHT node.
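The routing decision above can be sketched as a small function. The name `route_dht_request` and the string results are illustrative; `payload` is the DHT Request Packet payload laid out as in the table above.

```python
PUBLIC_KEY_SIZE = 32
MIN_DHT_PACKET_SIZE = 72  # minimum length of the enclosed DHT Packet


def route_dht_request(payload: bytes, own_key: bytes, close_list_keys) -> str:
    """Return "handle", "forward" or "drop" for a received DHT Request."""
    if len(payload) < PUBLIC_KEY_SIZE + MIN_DHT_PACKET_SIZE:
        return "drop"  # too short to be well formed
    addressee = payload[:PUBLIC_KEY_SIZE]
    if addressee == own_key:
        return "handle"   # decrypt and process locally
    if addressee in close_list_keys:
        return "forward"  # resend unaltered to that node
    return "drop"
```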

DHT request packets are used for DHT public key packets (see onion) and NAT ping packets.

NAT ping packets

A NAT ping packet is sent as the payload of a DHT request packet.

We use NAT ping packets to see if a friend we are not connected to directly is online and ready to do the hole punching.

NAT ping request

| Length | Contents |
|---|---|
| 1 | uint8_t (0xfe) |
| 1 | uint8_t (0x00) |
| 8 | uint64_t random number |

NAT ping response

| Length | Contents |
|---|---|
| 1 | uint8_t (0xfe) |
| 1 | uint8_t (0x01) |
| 8 | uint64_t random number (the same that was received in request) |
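A minimal sketch of building and matching these two packets. The random number is treated as 8 opaque bytes, since it is only echoed back and never interpreted; the function names are illustrative.

```python
import os

NAT_PING_ID = 0xFE
NAT_PING_REQUEST = 0x00
NAT_PING_RESPONSE = 0x01


def make_nat_ping_request():
    """Return (packet, random number) so the caller can match the reply."""
    number = os.urandom(8)
    return bytes([NAT_PING_ID, NAT_PING_REQUEST]) + number, number


def make_nat_ping_response(request: bytes) -> bytes:
    """Echo the random number from a request back in a response."""
    header = bytes([NAT_PING_ID, NAT_PING_REQUEST])
    if len(request) != 10 or request[:2] != header:
        raise ValueError("not a NAT ping request")
    return bytes([NAT_PING_ID, NAT_PING_RESPONSE]) + request[2:]


def is_matching_response(response: bytes, number: bytes) -> bool:
    return response == bytes([NAT_PING_ID, NAT_PING_RESPONSE]) + number
```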

TODO: handling these packets.

Effects of chosen constants on performance

If the bucket size of the k-buckets were increased, more packets would be needed to check whether each node is still alive, which would increase bandwidth usage, but reliability would go up. If the number of nodes were decreased, reliability would go down along with bandwidth usage. The reason for this relationship between reliability and number of nodes is that, if we assume that not every node has its UDP ports open or is behind a cone NAT, each node that does must be able to store a certain number of nodes behind restrictive NATs in order for others to be able to find those nodes. For example, if 7/8 of the nodes were behind restrictive NATs, using 8 nodes would not be enough, because the chance of some of these nodes being impossible to find in the network would be too high.

TODO(zugz): this seems a rather wasteful solution to this problem.

If the ping timeouts and delays between pings were higher it would decrease the bandwidth usage but increase the amount of disconnected nodes that are still being stored in the lists. Decreasing these delays would do the opposite.

Increasing the maximum size (8) of the DHT Search Entry Client Lists would increase bandwidth usage, might increase hole punching efficiency on symmetric NATs (more ports to guess from, see Hole punching), and might increase reliability. Lowering this number would have the opposite effect.

The timeouts and number of nodes in lists for toxcore were picked by feeling alone and are probably not the best values. This also applies to the behavior, which is simple and should be improved to make the network more resistant to Sybil attacks.

TODO: consider giving min and max values for the constants.

NATs

We assume that peers are either directly accessible or are behind one of 3 types of NAT:

Cone NATs: Assign one whole port to each UDP socket behind the NAT; any packet from any IP/port sent to that assigned port from the internet will be forwarded to the socket behind it.

Restricted Cone NATs: Assign one whole port to each UDP socket behind the NAT. However, it will only forward packets from IPs that the UDP socket has sent a packet to.

Symmetric NATs: The worst kind of NAT, they assign a new port for each IP/port a packet is sent to. They treat each new peer you send a UDP packet to as a ’connection’ and will only forward packets from the IP/port of that ’connection’.

Hole punching

Hole punching on normal cone NATs is achieved simply through the way in which the DHT functions.

Hole punching is considered when more than half of the 8 peers closest to the friend in the DHT return an IP/port for the friend, and we send a ping request to each of the returned IP/ports but get no response. If we have sent 4 ping requests to 4 IP/ports that supposedly belong to the friend and get no response, then this is enough for toxcore to start the hole punching. The numbers 8 and 4 are used in toxcore and were chosen based on feel alone and so may not be the best numbers.

Before starting the hole punching, the peer will send a NAT ping packet to the friend via the peers that say they know the friend. If a NAT ping response with the same random number is received the hole punching will start.

If a NAT ping request is received, we will first check if it is from a friend. If it is not from a friend it will be dropped. If it is from a friend, a response with the same 8 byte number as in the request will be sent back via the nodes that know the friend sending the request. If no nodes from the friend are known, the packet will be dropped.

Receiving a NAT ping response therefore means that the friend is both online and actively searching for us, as that is the only way they would know nodes that know us. This is important because hole punching will work only if the friend is actively trying to connect to us.

NAT ping requests are sent every 3 seconds in toxcore; if no response is received for 6 seconds, the hole punching will stop. Sending them at longer intervals would decrease bandwidth usage, but might increase the chance that the other node goes offline and that ping packets sent during the hole punching go to a dead peer. Decreasing the intervals will have the opposite effect.

There are 2 cases that toxcore handles for the hole punching. The first case is if each of the 4+ peers returned the same IP and port. The second is if the 4+ peers returned the same IP but different ports.

A third case that may occur is the peers returning different IPs and ports. This can only happen if the friend is behind a very restrictive NAT that cannot be hole punched or if the peer recently connected to another internet connection and some peers still have the old one stored. Since there is nothing we can do for the first option it is recommended to just use the most common IP returned by the peers and to ignore the other IP/ports.

In the case where the peers return the same IP and port it means that the other friend is on a restricted cone NAT. These kinds of NATs can be hole punched by getting the friend to send a packet to our public IP/port. This means that hole punching can be achieved easily and that we should just continue sending DHT ping packets regularly to that IP/port until we get a ping response. This will work because the friend is searching for us in the DHT and will find us and will send us a packet to our public IP/port (or try to with the hole punching), thereby establishing a connection.

For the case where peers do not return the same ports, this means that the other peer is on a symmetric NAT. Some symmetric NATs open ports in sequences so the ports returned by the other peers might be something like: 1345, 1347, 1389, 1395. The method to hole punch these NATs is to try to guess which ports are more likely to be used by the other peer when they try sending us ping requests and send some ping requests to these ports. Toxcore just tries all the ports beside each returned port (e.g. for the 4 ports above it would try: 1345, 1347, 1389, 1395, 1346, 1348, 1390, 1396, 1344, 1346…), getting gradually further and further away. Although this works, the method could be improved. When using this method toxcore will try up to 48 ports every 3 seconds until both connect. After 5 tries toxcore doubles this and starts trying ports from 1024 (48 each time) along with the previous port guessing. This seemed to fix hole punching for some symmetric NATs, most likely because a lot of them restart their count at 1024.
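The guessing order in the example can be reproduced with a short generator. This is a sketch of the pattern shown in the text, not toxcore's code: it yields the returned ports first, then each port plus 1, each port minus 1, each port plus 2, and so on. Like the example, it does not remove duplicate guesses. The name `port_guesses` is illustrative.

```python
from itertools import islice


def port_guesses(returned_ports):
    """Yield candidate ports, moving gradually away from the returned ones."""
    yield from returned_ports
    for delta in range(1, 65536):
        for sign in (1, -1):
            for port in returned_ports:
                guess = port + sign * delta
                if 0 < guess < 65536:  # skip values outside the port range
                    yield guess


# One batch of up to 48 guesses, as would be tried in one 3 second round.
first_batch = list(islice(port_guesses([1345, 1347, 1389, 1395]), 48))
```

The additional sweep starting at port 1024 after 5 tries is not modelled here.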

Increasing the amount of ports tried per second would make the hole punching go faster but might DoS NATs due to the large number of packets being sent to different IPs in a short amount of time. Decreasing it would make the hole punching slower.

This works in cases where both peers have different NATs. For example, if A and B are trying to connect to each other: A has a symmetric NAT and B a restricted cone NAT. A will detect that B has a restricted cone NAT and keep sending ping packets to his one IP/port. B will detect that A has a symmetric NAT and will send packets to it to try guessing his ports. If B manages to guess the port A is sending packets from they will connect together.

DHT Bootstrap Info (0xf0)

Bootstrap nodes are regular Tox nodes with a stable DHT public key. This means the DHT public key does not change across restarts. DHT bootstrap nodes have one additional request kind: Bootstrap Info. The request is simply a packet of length 78 bytes where the first byte is 0xf0. The other bytes are ignored.

The response format is as follows:

| Length | Type | Contents |
|---|---|---|
| 4 | Word32 | Bootstrap node version |
| 256 | Bytes | Message of the day |

LAN discovery

LAN discovery is a way to discover Tox peers that are on a local network. If two Tox friends are on a local network, the most efficient way for them to communicate together is to use the local network. If a Tox client is opened on a local network in which another Tox client exists then good behavior would be to bootstrap to the network using the Tox client on the local network. This is what LAN discovery aims to accomplish.

LAN discovery works by sending a UDP packet through the toxcore UDP socket to the interface broadcast address on IPv4, the global broadcast address (255.255.255.255) and the multicast address on IPv6 (FF02::1) on the default Tox UDP port (33445).

The LAN Discovery packet:

| Length | Contents |
|---|---|
| 1 | uint8_t (33) |
| 32 | DHT public key |

LAN Discovery packets contain the DHT public key of the sender. When a LAN Discovery packet is received, a DHT get nodes packet will be sent to the sender of the packet. This means that the DHT instance will bootstrap itself to every peer from which it receives one of these packets. Through this mechanism, Tox clients will bootstrap themselves automatically from other Tox clients running on the local network.
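A minimal sketch of building and parsing the packet described above; the function names are illustrative.

```python
from typing import Optional

LAN_DISCOVERY_ID = 33
PUBLIC_KEY_SIZE = 32


def make_lan_discovery(dht_public_key: bytes) -> bytes:
    if len(dht_public_key) != PUBLIC_KEY_SIZE:
        raise ValueError("DHT public key must be 32 bytes")
    return bytes([LAN_DISCOVERY_ID]) + dht_public_key


def parse_lan_discovery(packet: bytes) -> Optional[bytes]:
    """Return the sender's DHT public key, or None if the packet is malformed."""
    if len(packet) != 1 + PUBLIC_KEY_SIZE or packet[0] != LAN_DISCOVERY_ID:
        return None
    return packet[1:]
```

The parsed key only tells the receiver where to send a Nodes Request. The packet carries no cryptographic proof, so the sender is not treated as found until a valid response arrives.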

When enabled, toxcore sends these packets every 10 seconds to keep delays low. The packets could be sent up to every 60 seconds but this would make peer finding over the network 6 times slower.

LAN discovery enables two friends on a local network to find each other as the DHT prioritizes LAN addresses over non LAN addresses for DHT peers. Sending a get node request/bootstrapping from a peer successfully should also add them to the list of DHT peers if we are searching for them. The peer must not be immediately added if a LAN discovery packet with a DHT public key that we are searching for is received as there is no cryptographic proof that this packet is legitimate and not maliciously crafted. This means that a DHT get node or ping packet must be sent, and a valid response must be received, before we can say that this peer has been found.

LAN discovery is how Tox handles and makes everything work well on LAN.

Messenger

Messenger is the module at the top of all the other modules. It sits on top of friend_connection in the hierarchy of toxcore.

Messenger takes care of sending and receiving messages using the connection provided by friend_connection. The module provides a way for friends to connect and makes it usable as an instant messenger. For example, Messenger lets users set a nickname and status message which it then transmits to friends when they are online. It also allows users to send messages to friends and builds an instant messaging system on top of the lower level friend_connection module.

Messenger offers two methods to add a friend. The first way is to add a friend with only their long term public key. This is used when a friend needs to be added but for some reason a friend request should not be sent; the friend should only be added. This method is most commonly used to accept friend requests but could also be used in other ways. If two friends add each other using this function they will connect to each other. Adding a friend using this method just adds the friend to friend_connection and creates a new friend entry in Messenger for the friend.

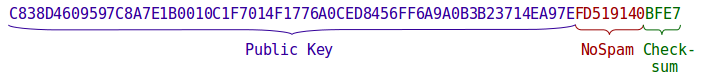

The Tox ID is used to identify peers so that they can be added as friends in Tox. In order to add a friend, a Tox user must have the friend’s Tox ID. The Tox ID contains the long term public key of the peer (32 bytes) followed by the 4 byte nospam (see: friend_requests) value and a 2 byte XOR checksum. The method of sending the Tox ID to others is up to the user and the client but the recommended way is to encode it in hexadecimal format and have the user manually send it to the friend using another program.

Tox ID:

| Length | Contents |
|---|---|
| 32 | long term public key |
| 4 | nospam |
| 2 | checksum |

The checksum is calculated by XORing the first two bytes of the ID with the next two bytes, then the next two bytes until all the 36 bytes have been XORed together. The result is then appended to the end to form the Tox ID.
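The checksum calculation can be sketched as follows. XORing consecutive 2 byte pairs is the same as XORing all even-indexed bytes into the first checksum byte and all odd-indexed bytes into the second. The function names are illustrative.

```python
def tox_id_checksum(data: bytes) -> bytes:
    """XOR the 36 bytes (public key + nospam) together in 2 byte pairs."""
    if len(data) != 36:
        raise ValueError("expected 32 byte public key plus 4 byte nospam")
    checksum = bytearray(2)
    for index, byte in enumerate(data):
        checksum[index % 2] ^= byte
    return bytes(checksum)


def make_tox_id(public_key: bytes, nospam: bytes) -> bytes:
    data = public_key + nospam
    return data + tox_id_checksum(data)


def is_valid_tox_id(tox_id: bytes) -> bool:
    return len(tox_id) == 38 and tox_id_checksum(tox_id[:36]) == tox_id[36:]
```

For display, the 38 bytes would typically be hex encoded, for example with `make_tox_id(key, nospam).hex().upper()`, giving 76 characters.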

The user must make sure the Tox ID is not intercepted and replaced in transit by a different Tox ID, which would mean the friend would connect to a malicious person instead of the user. Taking reasonable precautions against this is left to the user, as it is outside the scope of Tox. Tox assumes that the user has ensured that they are using the correct Tox ID, belonging to the intended person, to add a friend.

The second method to add a friend is by using their Tox ID and a message to be sent in a friend request. This way of adding friends will try to send a friend request, with the set message, to the peer whose Tox ID was added. The method is similar to the first one, except that a friend request is crafted and sent to the other peer.

When a friend connection associated to a Messenger friend goes online, an ONLINE packet will be sent to them. Friends are only set as online if an ONLINE packet is received.

As soon as a friend goes online, Messenger will stop sending friend requests to that friend, if it was sending them, as they are redundant for this friend.

Friends will be set as offline if either the friend connection associated to them goes offline or if an OFFLINE packet is received from the friend.

Messenger packets are sent to the friend using the online friend connection to the friend.

Should Messenger need to check whether any of the non lossy packets in the following list were received by the friend, for example to implement receipts for text messages, net_crypto can be used. The net_crypto packet number, used to send the packets, should be noted and then net_crypto checked later to see if the bottom of the send array is after this packet number. If it is, then the friend has received them. Note that net_crypto packet numbers could overflow after a long time, so checks should happen within 2**32 net_crypto packets sent with the same friend connection.

Message receipts for action messages and normal text messages are implemented by adding the net_crypto packet number of each message, along with the receipt number, to the top of a linked list that each friend has as they are sent. Every Messenger loop, the entries are read from the bottom, removed and passed to the client, stopping at the first entry that refers to a packet not yet received by the other side.
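The receipt list can be sketched with a double-ended queue. The class name and the wrap-around comparison are assumptions made for illustration: packet numbers are treated as 32 bit counters, and a packet counts as received once the bottom of the send array has moved past it.

```python
from collections import deque


def is_before(packet_number: int, send_array_bottom: int) -> bool:
    """True if packet_number lies before the bottom, allowing 32 bit wrap."""
    return 0 < (send_array_bottom - packet_number) % 2**32 < 2**31


class ReceiptQueue:
    def __init__(self) -> None:
        self._pending = deque()  # oldest entry on the left

    def sent(self, packet_number: int, receipt_number: int) -> None:
        """Record a message as it is handed to net_crypto."""
        self._pending.append((packet_number, receipt_number))

    def collect(self, send_array_bottom: int):
        """Pop and return receipt numbers of messages the friend has received."""
        delivered = []
        while self._pending and is_before(self._pending[0][0], send_array_bottom):
            delivered.append(self._pending.popleft()[1])
        return delivered
```

`collect` would be called once per Messenger loop, and each returned receipt number passed to the client.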

List of Messenger packets:

ONLINE

length: 1 byte

| Length | Contents |
|---|---|
| 1 | uint8_t (0x18) |

Sent to a friend when a connection is established to tell them to mark us as online in their friends list. This packet and the OFFLINE packet are necessary as friend_connections can be established with non-friends who are part of a groupchat. The two packets are used to differentiate between these peers, connected to the user through groupchats, and actual friends who ought to be marked as online in the friendlist.

On receiving this packet, Messenger will show the peer as being online.

OFFLINE

length: 1 byte

| Length | Contents |
|---|---|
| 1 | uint8_t (0x19) |

Sent to a friend when deleting the friend. Prevents a deleted friend from seeing us as online if we are connected to them because of a group chat.

On receiving this packet, Messenger will show this peer as offline.

NICKNAME

length: 1 byte to 129 bytes.

| Length | Contents |
|---|---|
| 1 | uint8_t (0x30) |
| [0, 128] | Nickname as a UTF8 byte string |

Used to send the nickname of the peer to others. This packet should be sent to each friend every time they come online and each time the nickname is changed.

STATUSMESSAGE

length: 1 byte to 1008 bytes.

| Length | Contents |
|---|---|
| 1 | uint8_t (0x31) |
| [0, 1007] | Status message as a UTF8 byte string |

Used to send the status message of the peer to others. This packet should be sent to each friend every time they come online and each time the status message is changed.

USERSTATUS

length: 2 bytes

| Length | Contents |
|---|---|
| 1 | uint8_t (0x32) |
| 1 | uint8_t status (0 = online, 1 = away, 2 = busy) |

Used to send the user status of the peer to others. This packet should be sent to each friend every time they come online and each time the user status is changed.

TYPING

length: 2 bytes

| Length | Contents |
|---|---|
| 1 | uint8_t (0x33) |
| 1 | uint8_t typing status (0 = not typing, 1 = typing) |

Used to tell a friend whether the user is currently typing or not.

MESSAGE

| Length | Contents |
|---|---|
| 1 | uint8_t (0x40) |
| [0, 1372] | Message as a UTF8 byte string |

Used to send a normal text message to the friend.

ACTION

| Length | Contents |
|---|---|
| 1 | uint8_t (0x41) |
| [0, 1372] | Action message as a UTF8 byte string |

Used to send an action message (like an IRC action) to the friend.
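The text-carrying packets above (NICKNAME, STATUSMESSAGE, MESSAGE and ACTION) share one shape: a packet ID byte followed by a UTF8 string with a maximum byte length. A minimal sketch of an encoder, with illustrative names:

```python
NICKNAME = 0x30
STATUSMESSAGE = 0x31
MESSAGE = 0x40
ACTION = 0x41

# Maximum payload length in bytes for each packet kind, from the tables above.
MAX_PAYLOAD = {NICKNAME: 128, STATUSMESSAGE: 1007, MESSAGE: 1372, ACTION: 1372}


def encode_text_packet(packet_id: int, text: str) -> bytes:
    """Encode a text packet; the limit applies to UTF8 bytes, not characters."""
    payload = text.encode("utf-8")
    if len(payload) > MAX_PAYLOAD[packet_id]:
        raise ValueError("text too long for this packet kind")
    return bytes([packet_id]) + payload
```

Because the limits are in bytes, a nickname of 65 two-byte characters is rejected even though it has fewer than 128 characters.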

MSI

| Length | Contents |
|---|---|
| 1 | uint8_t (0x45) |
| ? | data |

Reserved for Tox AV usage.

File Transfer Related Packets

FILE_SENDREQUEST

| Length | Contents |
|---|---|
| 1 | uint8_t (0x50) |
| 1 | uint8_t file number |
| 4 | uint32_t file type |
| 8 | uint64_t file size |
| 32 | file id (32 bytes) |
| [0, 255] | filename as a UTF8 byte string |

Note that file type and file size are sent in big endian/network byte format.
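A minimal sketch of encoding and decoding this packet with the big endian fields described above; the function names are illustrative.

```python
import struct

FILE_SENDREQUEST = 0x50
UINT64_MAX = 2**64 - 1  # file size meaning "unknown"
HEADER = ">BBIQ"        # packet id, file number, file type, file size


def encode_file_sendrequest(file_number: int, file_type: int, file_size: int,
                            file_id: bytes, filename: str = "") -> bytes:
    name = filename.encode("utf-8")
    if len(file_id) != 32:
        raise ValueError("file id must be 32 bytes")
    if len(name) > 255:
        raise ValueError("filename longer than 255 bytes")
    header = struct.pack(HEADER, FILE_SENDREQUEST, file_number, file_type, file_size)
    return header + file_id + name


def decode_file_sendrequest(packet: bytes):
    """Return (file number, file type, file size, file id, filename)."""
    if len(packet) < 46 or packet[0] != FILE_SENDREQUEST:
        raise ValueError("not a FILE_SENDREQUEST packet")
    _, number, file_type, size = struct.unpack(HEADER, packet[:14])
    return number, file_type, size, packet[14:46], packet[46:].decode("utf-8")
```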

FILE_CONTROL

length: 4 bytes if control_type isn’t seek. 12 bytes if control_type is seek.

| Length | Contents |
|---|---|
| 1 | uint8_t (0x51) |
| 1 | uint8_t send_receive |
| 1 | uint8_t file number |
| 1 | uint8_t control_type |
| 8 | uint64_t seek parameter |

send_receive is 0 if the control targets a file being sent (by the peer sending the file control), and 1 if it targets a file being received.

control_type can be one of: 0 = accept, 1 = pause, 2 = kill, 3 = seek.

The seek parameter is only included when control_type is seek (3).

Note that if it is included the seek parameter will be sent in big endian/network byte format.
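A minimal sketch of building a FILE_CONTROL packet, showing that the 8 byte seek parameter is only appended for the seek control type. The function and constant names are illustrative.

```python
import struct

FILE_CONTROL = 0x51
ACCEPT, PAUSE, KILL, SEEK = 0, 1, 2, 3


def encode_file_control(send_receive: int, file_number: int,
                        control_type: int, seek_position=None) -> bytes:
    """Return a 4 byte packet, or 12 bytes when control_type is SEEK."""
    header = bytes([FILE_CONTROL, send_receive, file_number, control_type])
    if control_type == SEEK:
        if seek_position is None:
            raise ValueError("seek requires a position")
        return header + struct.pack(">Q", seek_position)  # big endian
    return header
```

For example, a receiver resuming transfer 0 at byte 4096 would send `encode_file_control(1, 0, SEEK, 4096)` followed by `encode_file_control(1, 0, ACCEPT)`.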

FILE_DATA

length: 2 to 1373 bytes.

| Length | Contents |
|---|---|
| 1 | uint8_t (0x52) |
| 1 | uint8_t file number |
| [0, 1371] | file data piece |

Files are transferred in Tox using File transfers.

To initiate a file transfer, the sender creates and sends a FILE_SENDREQUEST packet to the friend it wants to initiate a file transfer to.

The first part of the FILE_SENDREQUEST packet is the file number. The file number is the number used to identify this file transfer. As the file number is represented by a 1 byte number, the maximum amount of concurrent files Tox can send to a friend is 256. 256 file transfers per friend is enough that clients can use tricks like queueing files if there are more files needing to be sent.

256 outgoing files per friend means that there is a maximum of 512 concurrent file transfers, between two users, if both incoming and outgoing file transfers are counted together.

As file numbers are used to identify the file transfer, the Tox instance must make sure to use a file number that isn’t used for another outgoing file transfer to that same friend when creating a new outgoing file transfer. File numbers are chosen by the file sender and stay unchanged for the entire duration of the file transfer. The file number is used by both FILE_CONTROL and FILE_DATA packets to identify which file transfer these packets are for.

The second part of the file transfer request is the file type. This is simply a number that identifies the type of file. For example, tox.h defines the file type 0 as being a normal file and type 1 as being an avatar, meaning the Tox client should use that file as an avatar. The file type does not affect in any way how the file is transferred or the behavior of the file transfer. It is set by the Tox client that creates the file transfer and sent to the friend untouched.

The file size indicates the total size of the file that will be transferred. A file size of UINT64_MAX (maximum value in a uint64_t) means that the size of the file is undetermined or unknown. For example if someone wanted to use Tox file transfers to stream data they would set the file size to UINT64_MAX. A file size of 0 is valid and behaves exactly like a normal file transfer.

The file id is 32 bytes that can be used to uniquely identify the file transfer. For example, avatar transfers use it as the hash of the avatar so that the receiver can check if they already have the avatar for a friend which saves bandwidth. It is also used to identify broken file transfers across toxcore restarts (for more info see the file transfer section of tox.h). The file transfer implementation does not care about what the file id is, as it is only used by things above it.

The last part of the file transfer request is the optional file name which is used to tell the receiver the name of the file.

When a FILE_SENDREQUEST packet is received, the implementation validates and sends the info to the Tox client which decides whether they should accept the file transfer or not.

To refuse or cancel a file transfer, they will send a FILE_CONTROL packet with control_type 2 (kill).

FILE_CONTROL packets are used to control the file transfer.

FILE_CONTROL packets are used to accept/unpause, pause, kill/cancel and seek file transfers. The control_type parameter denotes what the file control packet does.

The send_receive and file number are used to identify a specific file transfer. Since file numbers for outgoing and incoming files are not related to each other, the send_receive parameter is used to identify if the file number belongs to files being sent or files being received. If send_receive is 0, the file number corresponds to a file being sent by the user sending the file control packet. If send_receive is 1, it corresponds to a file being received by the user sending the file control packet.

control_type indicates the purpose of the FILE_CONTROL packet. control_type of 0 means that the FILE_CONTROL packet is used to tell the friend that the file transfer is accepted or that we are unpausing a previously paused (by us) file transfer. control_type of 1 is used to tell the other to pause the file transfer.

If one party pauses a file transfer, that party must be the one to unpause it. Should both sides pause a file transfer, both sides must unpause it before the file can be resumed. For example, if the sender pauses the file transfer, the receiver must not be able to unpause it. To unpause a file transfer, control_type 0 is used. Files can only be paused when they are in progress and have been accepted.
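The pause rule can be sketched by tracking each side's pause separately. The class and method names are hypothetical; the point is that an unpause from one side never clears the other side's pause.

```python
class PauseState:
    """Tracks which side has paused an accepted, in-progress file transfer."""

    def __init__(self) -> None:
        self.paused_by_us = False
        self.paused_by_peer = False

    def local_pause(self) -> None:
        # We send FILE_CONTROL with control_type 1.
        self.paused_by_us = True

    def local_unpause(self) -> None:
        # We send FILE_CONTROL with control_type 0; clears only our own pause.
        self.paused_by_us = False

    def remote_pause(self) -> None:
        # The peer sent FILE_CONTROL with control_type 1.
        self.paused_by_peer = True

    def remote_unpause(self) -> None:
        # The peer sent FILE_CONTROL with control_type 0; clears only its pause.
        self.paused_by_peer = False

    @property
    def paused(self) -> bool:
        return self.paused_by_us or self.paused_by_peer
```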

control_type 2 is used to kill, cancel or refuse a file transfer. When a FILE_CONTROL with this control type is received, the targeted file transfer is considered dead, will immediately be wiped and its file number can be reused. The peer sending the FILE_CONTROL must also wipe the targeted file transfer from their side. This control type can be used by both sides of the transfer at any time.

control_type 3, the seek control type, is used to tell the sender of the file to start sending from a different index in the file than 0. It can only be used right after receiving a FILE_SENDREQUEST packet and before accepting the file by sending a FILE_CONTROL with control_type 0. When this control_type is used, an extra 8 byte number in big endian format is appended to the FILE_CONTROL that is not present with other control types. This number indicates the index in bytes from the beginning of the file at which the file sender should start sending the file. The goal of this control type is to ensure that files can be resumed across core restarts. Tox clients can know if they have received a part of a file by using the file id and then using this packet to tell the other side to start sending from the last received byte. If the seek position is greater than or equal to the size of the file, the seek packet is invalid and the one receiving it will discard it.

To accept a file Tox will therefore send a seek packet, if it is needed, and then send a FILE_CONTROL packet with control_type 0 (accept) to tell the file sender that the file was accepted.

Once the file transfer is accepted, the file sender will start sending file data in sequential chunks from the beginning of the file (or the position from the FILE_CONTROL seek packet if one was received).

File data is sent using FILE_DATA packets. The file number corresponds to the file transfer that the file chunks belong to. The receiver assumes that the file transfer is over as soon as a chunk with the file data size not equal to the maximum size (1371 bytes) is received. This is how the sender tells the receiver that the file transfer is complete in file transfers where the size of the file is unknown (set to UINT64_MAX). The receiver also assumes that if the amount of received data equals the file size received in the FILE_SENDREQUEST, the file sending is finished and has been successfully received. Immediately after this occurs, the receiver frees up the file number so that a new incoming file transfer can use that file number. The implementation should discard any extra data received beyond the file size received at the beginning.

In 0 filesize file transfers, the sender will send one FILE_DATA packet with a file data size of 0.
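The receiver's end-of-transfer rules can be sketched as below. The class name is hypothetical and the data is buffered in memory only to keep the example short; a real client would write chunks to disk.

```python
MAX_CHUNK = 1371        # maximum file data size in one FILE_DATA packet
UINT64_MAX = 2**64 - 1  # file size meaning "unknown"


class IncomingFile:
    def __init__(self, file_size: int) -> None:
        self.file_size = file_size
        self.received = bytearray()
        self.finished = False

    def on_chunk(self, chunk: bytes) -> None:
        """Process the file data piece of one FILE_DATA packet."""
        if self.finished:
            return
        kept = chunk
        if self.file_size != UINT64_MAX:
            # Discard any data beyond the announced file size.
            kept = chunk[: self.file_size - len(self.received)]
        self.received += kept
        # A short chunk or reaching the announced size ends the transfer.
        if len(chunk) != MAX_CHUNK or len(self.received) == self.file_size:
            self.finished = True
```

A transfer with unknown size ends only on a short chunk, and a 0 byte file ends on its single empty chunk.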

The sender will know if the receiver has received the file successfully

by checking if the friend has received the last FILE_DATA packet sent

(containing the last chunk of the file). net_crypto can be used to

check whether packets sent through it have been received by storing the

packet number of the sent packet and verifying later in net_crypto to

see whether it was received or not. As soon as net_crypto says the

other side received the packet, the file transfer is considered successful

and is wiped, and the file number can be reused to send new files.

FILE_DATA packets should be sent as fast as the net_crypto

connection can handle them while respecting its congestion control.

If the friend goes offline, all file transfers are cleared in toxcore. This makes it simpler for toxcore as it does not have to deal with resuming file transfers. It also makes it simpler for clients as the method for resuming file transfers remains the same, even if the client is restarted or toxcore loses the connection to the friend because of a bad internet connection.

Group Chat Related Packets

| Packet ID | Packet Name |
|---|---|
| 0x60 | INVITE_GROUPCHAT |
| 0x61 | ONLINE_PACKET |
| 0x62 | DIRECT_GROUPCHAT |
| 0x63 | MESSAGE_GROUPCHAT |
| 0xC7 | LOSSY_GROUPCHAT |

Messenger also takes care of saving the friends list and other friend information so that it’s possible to close and start toxcore while keeping all your friends, your long term key and the information necessary to reconnect to the network.

Important information messenger stores includes: the long term private key, our current nospam value, our friends’ public keys and any friend requests the user is currently sending. The network DHT nodes, TCP relays and some onion nodes are stored to aid reconnection.

In addition to this, a lot of optional data can be stored, such as the usernames of friends, our current username, the status messages of friends and our own status message. The exact format of the toxcore save is explained later.

The TCP server is run from the toxcore messenger module if the client has enabled it. TCP server is usually run independently as part of the bootstrap node package but it can be enabled in clients. If it is enabled in toxcore, Messenger will add the running TCP server to the TCP relay.

Messenger is the module that transforms code that can connect to friends based on public key into a real instant messenger.

TCP client

TCP client is the client for the TCP server. It establishes and keeps

a connection to the TCP server open.

All the packet formats are explained in detail in TCP server so this

section will only cover TCP client specific details which are not

covered in the TCP server documentation.

TCP clients can choose to connect to TCP servers through a proxy. Most common types of proxies (SOCKS, HTTP) work by establishing a connection through a proxy using the protocol of that specific type of proxy. After the connection through that proxy to a TCP server is established, the socket behaves from the point of view of the application exactly like a TCP socket that connects directly to a TCP server instance. This means supporting proxies is easy.

TCP client first establishes a TCP connection, either through a proxy

or directly to a TCP server. It uses the DHT public key as its long term

key when connecting to the TCP server.

It establishes a secure connection to the TCP server. After establishing a connection to the TCP server, and when the handshake response has been received from the TCP server, the toxcore implementation immediately sends a ping packet. Ideally the first packets sent would be routing request packets but this solution aids code simplicity and allows the server to confirm the connection.

Ping packets, like all other data packets, are sent as encrypted packets.

Ping packets are sent by the toxcore TCP client every 30 seconds with a timeout of 10 seconds, the same interval and timeout as toxcore TCP server ping packets. They are the same because they accomplish the same thing.
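The ping schedule can be sketched as a small timer. This is an illustrative sketch, not toxcore's implementation: the class name, the returned strings and the choice to measure both the interval and the timeout from the time the last ping was sent are assumptions.

```python
PING_INTERVAL = 30.0  # seconds between pings
PING_TIMEOUT = 10.0   # seconds to wait for a ping response


class PingTimer:
    """Hypothetical ping scheduler for one TCP client connection."""

    def __init__(self):
        self.last_sent = None   # time the most recent ping was sent
        self.pending = False    # True while a ping response is awaited

    def poll(self, now: float):
        """Return "send_ping", "timeout" or None for the current time."""
        if self.pending:
            if now - self.last_sent >= PING_TIMEOUT:
                return "timeout"
            return None
        # The first poll after the handshake sends a ping immediately.
        if self.last_sent is None or now - self.last_sent >= PING_INTERVAL:
            self.last_sent = now
            self.pending = True
            return "send_ping"
        return None

    def on_ping_response(self):
        """Record that the response to the outstanding ping arrived."""
        self.pending = False
```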

TCP client must have a mechanism to make sure important packets

(routing requests, disconnection notifications, ping packets, ping

response packets) don’t get dropped because the TCP socket is full.

Should this happen, the TCP client must save these packets and

prioritize sending them, in order, when the TCP socket on the server

becomes available for writing again. TCP client must also take into

account that packets might be bigger than the number of bytes it can

currently write to the socket. In this case, it must save the bytes of

the packet that it didn’t write to the socket and write them to the

socket as soon as the socket allows so that the connection does not get

broken. It must also assume that it may receive only part of an

encrypted packet. If this occurs it must save the part of the packet it

has received and wait for the rest of the packet to arrive before

handling it.
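The send-side requirements above can be sketched as a queue wrapped around a non-blocking write. Everything here is hypothetical: `write` stands for any callable that accepts bytes and returns how many of them the socket took, and the class is not toxcore's actual data structure.

```python
from collections import deque


class PrioritySendQueue:
    """Hypothetical send path that never drops important packets."""

    def __init__(self, write):
        self._write = write       # callable(bytes) -> number of bytes accepted
        self._partial = b""       # unsent tail of a partly written packet
        self._priority = deque()  # important packets waiting, in order

    def flush(self) -> bool:
        """Write pending bytes; return True once nothing is pending."""
        while True:
            if self._partial:
                written = self._write(self._partial)
                self._partial = self._partial[written:]
                if self._partial:
                    return False  # socket is full again
            if not self._priority:
                return True
            self._partial = self._priority.popleft()

    def send(self, packet: bytes, important: bool) -> bool:
        """Send a packet; return False if it had to be dropped."""
        if not self.flush():
            if important:
                self._priority.append(packet)
                return True
            return False
        written = self._write(packet)
        if written < len(packet):
            if written == 0 and not important:
                return False
            # A partly written packet must be completed later, otherwise
            # the stream would be corrupted and the connection broken.
            self._partial = packet[written:]
        return True
```

The receive side needs the mirror image: bytes are appended to a buffer, and a packet is handled only once all of its bytes have arrived.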

TCP client can be used to open up a route to friends who are connected

to the TCP server. This is done by sending a routing request to the TCP

server with the DHT public key of the friend. This tells the server to

register a connection_id to the DHT public key sent in the packet. The

server will then respond with a routing response packet. If the

connection was accepted, the TCP client will store the connection id

for this connection. The TCP client will make sure that routing

response packets are responses to a routing packet that it sent by

storing that it sent a routing packet to that public key and checking

the response against it. This prevents the possibility of a bad TCP

server exploiting the client.
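The check on routing responses can be sketched as follows. The class, its method names and the `accepted` flag are assumptions for illustration; how acceptance is actually encoded in the routing response is defined by the packet format in the TCP server section.

```python
class RouteTracker:
    """Hypothetical bookkeeping for routing requests sent to one TCP server."""

    def __init__(self):
        self._pending = set()       # DHT public keys we sent requests for
        self._connection_ids = {}   # DHT public key -> connection id

    def request_route(self, friend_dht_pk: bytes) -> None:
        """Remember that a routing request was sent for this key."""
        self._pending.add(friend_dht_pk)

    def on_routing_response(self, friend_dht_pk: bytes,
                            connection_id: int, accepted: bool) -> bool:
        """Handle a routing response; return False if it was unsolicited."""
        if friend_dht_pk not in self._pending:
            # We never asked for this route, so ignore the response.
            return False
        self._pending.discard(friend_dht_pk)
        if accepted:
            self._connection_ids[friend_dht_pk] = connection_id
        return True

    def connection_id_for(self, friend_dht_pk: bytes):
        """Return the stored connection id, or None if there is none."""
        return self._connection_ids.get(friend_dht_pk)
```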

The TCP client will handle connection notifications and disconnection

notifications by alerting the module using it that the connection to the

peer is up or down.

TCP client will send a disconnection notification to kill a connection

to a friend. It must send a disconnection notification packet regardless

of whether the peer was online or offline so that the TCP server will

unregister the connection.

Data to friends can be sent through the TCP relay using OOB (out of band) packets and connected connections. To send an OOB packet, the DHT public key of the friend must be known. OOB packets are sent blind: there is no way to query the TCP relay to see if the friend is connected before sending one. OOB packets should be sent when the connection to the friend via the TCP relay isn’t in a connected state but it is known that the friend is connected to that relay. If the friend is connected via the TCP relay, then normal data packets must be sent as they are smaller than OOB packets.

OOB recv and data packets must be handled and passed to the module using the TCP client.
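The choice between the two packet kinds reduces to a short decision, sketched here with hypothetical names and return values:

```python
def choose_packet_kind(connection_is_connected: bool,
                       friend_known_on_relay: bool):
    """Pick how to send data to a friend through one TCP relay.

    Returns "data", "oob" or None (do not send through this relay).
    """
    if connection_is_connected:
        # Normal data packets are smaller than OOB packets.
        return "data"
    if friend_known_on_relay:
        # Sent blind: the relay cannot be asked whether the friend is there.
        return "oob"
    return None
```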

TCP connections

TCP_connections takes care of handling multiple TCP client instances

to establish a reliable connection via TCP relays to a friend.

Connecting to a friend with only one relay would not be very reliable,

so TCP_connections provides the level of abstraction needed to manage

multiple relays. For example, it ensures that if a relay goes down, the

connection to the peer will not be impacted. This is done by connecting

to the other peer with more than one relay.

TCP_connections is above TCP client and below

net_crypto.

A TCP connection in TCP_connections is defined as a connection to a

peer through one or more TCP relays. To connect to another peer with

TCP_connections, a connection in TCP_connections to the peer with

DHT public key X will be created. Some TCP relays which we know the peer

is connected to will then be associated with that peer. If the peer

isn’t connected directly yet, these relays will be the ones that the

peer has sent to us via the onion module. The peer will also send some

relays it is directly connected to once a connection is established,

however, this is done by another module.

TCP_connections has a list of all relays it is connected to. It tries

to keep the number of relays it is connected to as small as possible in

order to minimize load on relays and lower bandwidth usage for the

client. The desired number of TCP relay connections per peer is set to 3

in toxcore with the maximum number set to 6. The reason for these

numbers is that 1 would mean no backup relays and 2 would mean only 1

backup. To be sure that the connection is reliable 3 seems to be a

reasonable lower bound. The maximum number of 6 is the maximum number of

relays that can be tied to each peer. This maximum comes into play when

two peers are each connected to the same 6 or more relays and both need

to stay connected to that many relays because of other friends. There is

no particular reason why this number is 6, but in toxcore it has to be at

least double the desired number (3) because the code assumes

this.

If necessary, TCP_connections will connect to TCP relays to use them

to send onion packets. This is only done if there is no UDP connection

to the network. When there is a UDP connection, packets are sent with

UDP only because sending them with TCP relays can be less reliable. It

is also important that we are connected at all times to some relays as

these relays will be used by TCP only peers to initiate a connection to

us.

In toxcore, each client is connected to 3 relays even if there are no TCP peers and the onion is not needed. It might be optimal to only connect to these relays when toxcore is initializing as this is the only time when peers will connect to us via TCP relays we are connected to. Due to how the onion works, after the initialization phase, where each peer is searched in the onion and then if they are found the info required to connect back (DHT pk, TCP relays) is sent to them, there should be no more peers connecting to us via TCP relays. This may be a way to further reduce load on TCP relays, however, more research is needed before it is implemented.

TCP_connections picks one relay and uses only it for sending data to

the other peer. The reason for not picking a random connected relay for

each packet is that it severely deteriorates the quality of the link

between two peers and makes performance of lossy video and audio

transmissions really poor. For this reason, one relay is picked and used

to send all data. If for any reason no more data can be sent through

that relay, the next relay is used. This may happen if the TCP socket is

full and so the relay should not necessarily be dropped if this occurs.

Relays are only dropped if they time out or if they become useless (if

the relay is one too many or is no longer being used to relay data to

any peers).
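The relay selection policy above can be sketched as a "sticky" selector that moves on only when the current relay cannot take the packet. The class and the `try_send` callback are hypothetical; `try_send(relay, packet)` is assumed to return False when the relay cannot accept data, for example because its TCP socket is full.

```python
class RelaySelector:
    """Hypothetical sticky relay choice for sending data to one peer."""

    def __init__(self, relays):
        self.relays = list(relays)
        self.current = 0  # index of the relay currently used for all data

    def send(self, packet: bytes, try_send):
        """Send via the current relay, falling back to the next ones.

        Returns the relay that took the packet, or None if none could.
        """
        for offset in range(len(self.relays)):
            index = (self.current + offset) % len(self.relays)
            if try_send(self.relays[index], packet):
                # Stay on this relay for subsequent packets. A relay that
                # was skipped is not dropped; it may only have been full.
                self.current = index
                return self.relays[index]
        return None
```

Sticking to one relay avoids spreading consecutive packets over links with different latencies, which is what degrades lossy audio and video.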

TCP_connections in toxcore also contains a mechanism to make

connections go to sleep. TCP connections to other peers may be put to

sleep if the connection to the peer establishes itself with UDP after

the connection is established with TCP. UDP is the method preferred by

net_crypto to communicate with other peers. In order to keep track of

the relays which were used to connect with the other peer in case the

UDP connection fails, they are saved by TCP_connections when the

connection is put to sleep. Any relays which were only used by this

redundant connection are saved then disconnected from. If the connection